SalsaNext: Optimized for Qualcomm Devices

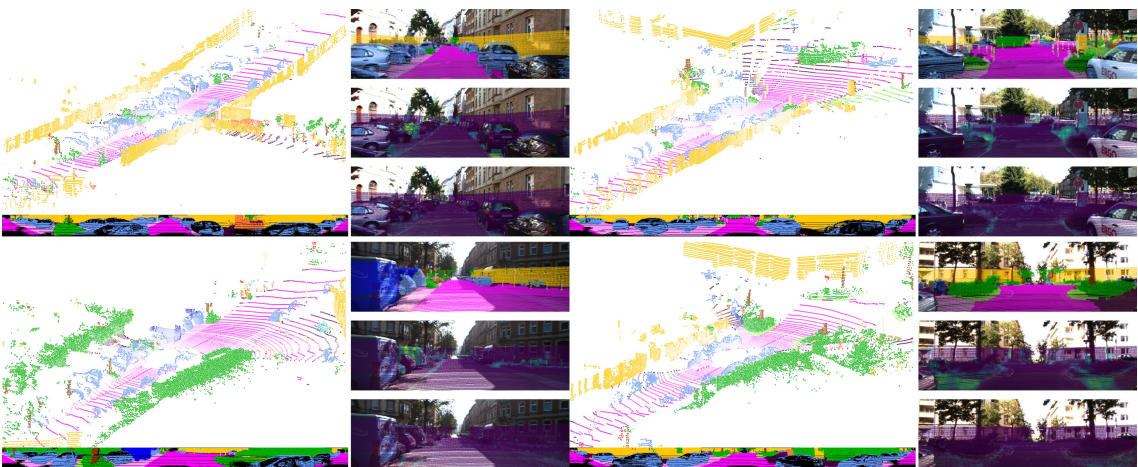

SalsaNext is a LiDAR-based model designed for efficient and accurate semantic segmentation.

This repository contains pre-exported model files optimized for Qualcomm® devices. You can use the Qualcomm® AI Hub Models library to export the model with custom configurations. More details on model performance across various devices can be found on Qualcomm® AI Hub.

Qualcomm AI Hub Models uses Qualcomm AI Hub Workbench to compile, profile, and evaluate this model. Sign up to run these models on a hosted Qualcomm® device.

Getting Started

There are two ways to deploy this model on your device:

Option 1: Download Pre-Exported Models

Below are pre-exported model assets ready for deployment.

| Runtime | Precision | Chipset | SDK Versions | Download |
|---|---|---|---|---|
| ONNX | float | Universal | QAIRT 2.37, ONNX Runtime 1.23.0 | Download |
| QNN_DLC | float | Universal | QAIRT 2.42 | Download |
| QNN_DLC | w8a16 | Universal | QAIRT 2.42 | Download |
| TFLITE | float | Universal | QAIRT 2.42, TFLite 2.17.0 | Download |

For more device-specific assets and performance metrics, visit SalsaNext on Qualcomm® AI Hub.

Option 2: Export with Custom Configurations

Use the Qualcomm® AI Hub Models Python library to compile and export the model with your own:

- Custom weights (e.g., fine-tuned checkpoints)

- Custom input shapes

- Target device and runtime configurations

This option is ideal if you need to customize the model beyond the default configuration provided here.

See our repository for SalsaNext on GitHub for usage instructions.

Model Details

Model Type: Semantic segmentation

Model Stats:

- Model checkpoint: SalsaNext

- Input resolution: 1x5x64x2048

- Number of parameters: 6.71M

- Model size (float): 25.7 MB
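The input resolution 1x5x64x2048 reads as batch × channels × height × width: a single range image of 64 rows by 2048 columns with five values per pixel. SalsaNext works on a spherical projection of the LiDAR scan. The sketch below shows a RangeNet++-style spherical projection in plain Python. It is a minimal sketch built on several assumptions that are not taken from the exported model. These assumptions are the channel order (range, x, y, z, intensity), the vertical field of view (+3° to -25°, typical of the Velodyne HDL-64E used in SemanticKITTI), the fill value of -1 for empty pixels, and the hypothetical helper names `project_point` and `build_range_image`. Check the preprocessing code in the AI Hub Models repository before relying on any of them.

```python
import math

# Hypothetical preprocessing parameters (assumptions, not read from the exported model):
# image size from the 1x5x64x2048 input, and an HDL-64E-style vertical field of view.
H, W = 64, 2048
FOV_UP_DEG = 3.0
FOV_DOWN_DEG = -25.0


def project_point(x, y, z, h=H, w=W, fov_up_deg=FOV_UP_DEG, fov_down_deg=FOV_DOWN_DEG):
    """Map a 3D point to (row, col, range) in an h x w range image."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("a point at the sensor origin has no direction")
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = abs(fov_up) + abs(fov_down)
    yaw = -math.atan2(y, x)                        # azimuth in [-pi, pi]
    pitch = math.asin(max(-1.0, min(1.0, z / r)))  # elevation angle, clamped for safety
    u = 0.5 * (yaw / math.pi + 1.0)                # normalized column in [0, 1]
    v = 1.0 - (pitch + abs(fov_down)) / fov        # normalized row, 0 at the top
    col = min(w - 1, max(0, math.floor(u * w)))    # clamp to valid pixel indices
    row = min(h - 1, max(0, math.floor(v * h)))
    return row, col, r


def build_range_image(points, h=H, w=W):
    """Project (x, y, z, intensity) points into a 5 x h x w nested list.

    Channel order (assumed): range, x, y, z, intensity. Empty pixels hold -1.0,
    and when several points land on the same pixel the closest one is kept.
    """
    image = [[[-1.0] * w for _ in range(h)] for _ in range(5)]
    for x, y, z, intensity in points:
        if x == 0.0 and y == 0.0 and z == 0.0:
            continue  # skip returns at the origin
        row, col, r = project_point(x, y, z, h, w)
        current = image[0][row][col]
        if current < 0.0 or r < current:
            for channel, value in enumerate((r, x, y, z, intensity)):
                image[channel][row][col] = value
    return image


scan = [(10.0, 0.0, 0.0, 0.3), (20.0, 0.0, 0.0, 0.9), (0.0, 10.0, 0.0, 0.5)]
img = build_range_image(scan)
print(len(img), len(img[0]), len(img[0][0]))  # 5 64 2048
print(project_point(10.0, 0.0, 0.0))          # (6, 1024, 10.0)
```

Real pipelines typically also normalize each channel with dataset statistics before inference, and this sketch omits that step. Per-pixel labels predicted by the model can be mapped back to the original points by reusing the (row, col) that `project_point` returns for each point.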
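As a sanity check on the Model Stats, float32 weights take 4 bytes per parameter, so the float model size should be roughly the parameter count times 4 bytes. The sketch below assumes two things: the listed 25.7 MB is measured in mebibytes (2^20 bytes), and the file is dominated by its weights. `weight_size_mib` is a hypothetical helper. The 8-bit figure is only a rough estimate of the weight payload of the w8a16 variant, and it ignores quantization parameters and file overhead.

```python
def weight_size_mib(num_params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in MiB (2**20 bytes), ignoring file overhead."""
    return num_params * bytes_per_param / 2**20


NUM_PARAMS = 6.71e6  # "Number of parameters: 6.71M" from Model Stats

float32_mib = weight_size_mib(NUM_PARAMS, 4)  # float32: 4 bytes per weight
int8_mib = weight_size_mib(NUM_PARAMS, 1)     # 8-bit weights (w8a16): 1 byte per weight

print(f"float32 weights: ~{float32_mib:.1f} MiB")  # ~25.6 MiB
print(f"8-bit weights:   ~{int8_mib:.1f} MiB")     # ~6.4 MiB
```

The float estimate of about 25.6 MiB is close to the listed 25.7 MB. Under the MiB assumption, the small gap is plausibly serialization overhead.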

Performance Summary

| Model | Runtime | Precision | Chipset | Inference Time (ms) | Peak Memory Range (MB) | Primary Compute Unit |
|---|---|---|---|---|---|---|
| SalsaNext | ONNX | float | Snapdragon® X Elite | 33.24 | 33 - 33 | NPU |
| SalsaNext | ONNX | float | Snapdragon® 8 Gen 3 Mobile | 27.18 | 27 - 241 | NPU |
| SalsaNext | ONNX | float | Qualcomm® QCS8550 (Proxy) | 34.597 | 20 - 40 | NPU |
| SalsaNext | ONNX | float | Qualcomm® QCS9075 | 40.745 | 23 - 25 | NPU |
| SalsaNext | ONNX | float | Snapdragon® 8 Elite For Galaxy Mobile | 21.369 | 23 - 155 | NPU |
| SalsaNext | ONNX | float | Snapdragon® 8 Elite Gen 5 Mobile | 15.944 | 26 - 164 | NPU |
| SalsaNext | QNN_DLC | float | Snapdragon® X Elite | 30.095 | 3 - 3 | NPU |
| SalsaNext | QNN_DLC | float | Snapdragon® 8 Gen 3 Mobile | 22.357 | 0 - 261 | NPU |
| SalsaNext | QNN_DLC | float | Qualcomm® QCS8275 (Proxy) | 116.123 | 1 - 176 | NPU |
| SalsaNext | QNN_DLC | float | Qualcomm® QCS8550 (Proxy) | 30.828 | 3 - 5 | NPU |
| SalsaNext | QNN_DLC | float | Qualcomm® QCS9075 | 40.105 | 3 - 17 | NPU |
| SalsaNext | QNN_DLC | float | Qualcomm® QCS8450 (Proxy) | 55.424 | 0 - 296 | NPU |
| SalsaNext | QNN_DLC | float | Snapdragon® 8 Elite For Galaxy Mobile | 20.556 | 3 - 186 | NPU |
| SalsaNext | QNN_DLC | float | Snapdragon® 8 Elite Gen 5 Mobile | 15.21 | 3 - 191 | NPU |
| SalsaNext | QNN_DLC | w8a16 | Snapdragon® X Elite | 43.137 | 1 - 1 | NPU |
| SalsaNext | QNN_DLC | w8a16 | Snapdragon® 8 Gen 3 Mobile | 34.328 | 0 - 365 | NPU |
| SalsaNext | QNN_DLC | w8a16 | Qualcomm® QCS8550 (Proxy) | 44.739 | 1 - 4 | NPU |
| SalsaNext | QNN_DLC | w8a16 | Qualcomm® QCS9075 | 50.506 | 1 - 9 | NPU |
| SalsaNext | QNN_DLC | w8a16 | Qualcomm® QCS8450 (Proxy) | 82.609 | 1 - 424 | NPU |
| SalsaNext | QNN_DLC | w8a16 | Snapdragon® 8 Elite For Galaxy Mobile | 28.297 | 1 - 275 | NPU |
| SalsaNext | QNN_DLC | w8a16 | Snapdragon® 8 Elite Gen 5 Mobile | 19.552 | 1 - 314 | NPU |
| SalsaNext | TFLITE | float | Snapdragon® 8 Gen 3 Mobile | 22.149 | 8 - 277 | NPU |
| SalsaNext | TFLITE | float | Qualcomm® QCS8275 (Proxy) | 613.33 | 8 - 37 | GPU |
| SalsaNext | TFLITE | float | Qualcomm® QCS8550 (Proxy) | 30.525 | 0 - 17 | NPU |
| SalsaNext | TFLITE | float | Qualcomm® QCS9075 | 39.65 | 8 - 36 | NPU |
| SalsaNext | TFLITE | float | Qualcomm® QCS8450 (Proxy) | 56.073 | 10 - 310 | NPU |
| SalsaNext | TFLITE | float | Snapdragon® 8 Elite For Galaxy Mobile | 18.097 | 9 - 192 | NPU |
| SalsaNext | TFLITE | float | Snapdragon® 8 Elite Gen 5 Mobile | 15.303 | 10 - 198 | NPU |
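The inference times in the Performance Summary convert directly into an upper bound on single-stream throughput: 1000 divided by the latency in milliseconds gives frames per second. This is a convenient way to compare a device against a sensor's scan rate. The sketch below copies the QNN_DLC rows from the table and compares the float and w8a16 variants per chipset. `frames_per_second` is a hypothetical helper. The numbers cover model inference only and ignore preprocessing, postprocessing, and data transfer.

```python
# Inference times (ms) copied from the QNN_DLC rows of the Performance Summary.
# QCS8275 is omitted because the table lists only a float result for it.
QNN_DLC_LATENCY_MS = {
    "Snapdragon® X Elite": {"float": 30.095, "w8a16": 43.137},
    "Snapdragon® 8 Gen 3 Mobile": {"float": 22.357, "w8a16": 34.328},
    "Qualcomm® QCS8550 (Proxy)": {"float": 30.828, "w8a16": 44.739},
    "Qualcomm® QCS9075": {"float": 40.105, "w8a16": 50.506},
    "Qualcomm® QCS8450 (Proxy)": {"float": 55.424, "w8a16": 82.609},
    "Snapdragon® 8 Elite For Galaxy Mobile": {"float": 20.556, "w8a16": 28.297},
    "Snapdragon® 8 Elite Gen 5 Mobile": {"float": 15.21, "w8a16": 19.552},
}


def frames_per_second(latency_ms: float) -> float:
    """Upper bound on sequential, single-batch throughput from model latency alone."""
    return 1000.0 / latency_ms


for chipset, latency in QNN_DLC_LATENCY_MS.items():
    ratio = latency["w8a16"] / latency["float"]  # > 1 means w8a16 is slower
    print(
        f"{chipset}: float {frames_per_second(latency['float']):.1f} FPS, "
        f"w8a16 {frames_per_second(latency['w8a16']):.1f} FPS, "
        f"w8a16/float latency ratio {ratio:.2f}"
    )
```

On every chipset in these rows, the w8a16 variant has a higher inference time than the float variant.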

License

- The license for the original implementation of SalsaNext can be found here.

Community

- Join our AI Hub Slack community to collaborate, post questions and learn more about on-device AI.

- For questions or feedback, please reach out to us.